Images are normally stored as a two-dimensional array in which each element is a pixel. Stored this way, an image takes up a lot of space on disk. Take a picture of 1000×1000 pixels at 24 bits per pixel (24 bits give about 16 million colors): in theory, storing it needs 24 x 1000 x 1000 = 24,000,000 bits, or 3,000,000 bytes, or about 2,930 KB, or about 2.86 MB. That is only the theoretical figure, because a bitmap file also needs a header and other additional information, so the file on disk ends up larger still.
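The arithmetic above can be checked with a few lines of Python. This is only a sketch: the function name `raw_image_size` is made up for illustration, and it counts pixel data only, ignoring any header.

```python
def raw_image_size(width, height, bits_per_pixel):
    """Return the uncompressed pixel-data size as (bits, bytes, KB, MB)."""
    bits = width * height * bits_per_pixel
    size_bytes = bits // 8
    kb = size_bytes / 1024
    mb = kb / 1024
    return bits, size_bytes, kb, mb

bits, size_bytes, kb, mb = raw_image_size(1000, 1000, 24)
print(bits, size_bytes, round(kb), round(mb, 2))  # 24000000 3000000 2930 2.86
print(2 ** 24)  # 16777216 distinct colors with 24 bits
```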

For this reason images are usually compressed. The main compression methods are:

- RLE (Run Length Encoding)

- LZW (Lempel-Ziv-Welch)

- CCITT (International Telegraph and Telephone Consultative Committee)

- DCT (Discrete Cosine Transform, the basis of the scheme known as JPEG, which stands for Joint Photographic Experts Group)

The first three are lossless: no information is lost, so the decompressed image is identical to the original. The last one is lossy: some information from the original is lost, but the loss is invisible or nearly invisible to the observer.

Compression works by having an algorithm remove so-called redundant data. For example, instead of writing the same value 50 times in a row, it is much better to write it once together with the count 50. This idea gave rise to RLE, a lossless method used for vector-style graphics and black-and-white images such as faxes. Captured images (photographs) are not that regular, and the only methods that compress them well are lossy.

GIF (Graphics Interchange Format) and TIFF (Tag Image File Format), which build on LZW compression, are also worth listing. Because they are lossless, they can squeeze a captured image only a little, so lossy JPEG is preferable for photographs. LZW encodings typically reach a compression ratio of 2:1 to 5:1, while lossy JPEG reaches 10:1 to 20:1, up to about 10 times more.
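The run-length idea can be sketched in a few lines of Python. The function names and the `[count, value]` pair layout are assumptions made for this illustration; real file formats pack runs into bytes in their own ways.

```python
def rle_encode(pixels):
    """Collapse consecutive equal values into [count, value] pairs."""
    runs = []
    for value in pixels:
        if runs and runs[-1][1] == value:
            runs[-1][0] += 1          # extend the current run
        else:
            runs.append([1, value])   # start a new run
    return runs

def rle_decode(runs):
    """Expand [count, value] pairs back into the original sequence."""
    out = []
    for count, value in runs:
        out.extend([value] * count)
    return out

row = [1] * 50 + [0] * 3              # one scan line: 50 white pixels, 3 black
encoded = rle_encode(row)
print(encoded)                        # [[50, 1], [3, 0]]
print(rle_decode(encoded) == row)     # True: nothing was lost
```

A row with long runs shrinks from 53 values to 2 pairs, while a noisy photographic row would produce almost one pair per pixel, which is why RLE suits fax-like images and not photographs.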

JPEG compression is done in four phases. First, the image is converted to a different color space and the color information is subsampled, because the human eye perceives brightness much more precisely than color.
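A minimal sketch of the color step follows. It assumes the full-range YCbCr conversion used by JFIF files and a simple 2×2 averaging of the color planes; the function names are hypothetical and real encoders offer several subsampling layouts.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to YCbCr (JFIF-style full-range coefficients)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr

def subsample_2x2(plane):
    """Average each 2x2 block of a plane (list of rows, even width and height)."""
    out = []
    for i in range(0, len(plane), 2):
        row = []
        for j in range(0, len(plane[0]), 2):
            total = (plane[i][j] + plane[i][j + 1]
                     + plane[i + 1][j] + plane[i + 1][j + 1])
            row.append(total / 4)
        out.append(row)
    return out

# A 4x4 color plane keeps only 2x2 samples after subsampling.
cb_plane = [[100, 102, 50, 52],
            [104, 106, 54, 56],
            [200, 200, 10, 10],
            [200, 200, 10, 10]]
small = subsample_2x2(cb_plane)
print(len(small), len(small[0]))  # 2 2
```

The brightness plane (Y) is kept at full resolution, while each color plane (Cb, Cr) shrinks to a quarter of its samples before any further compression takes place.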

Next, the image is split into blocks of 8×8 pixels and the transform (DCT) is calculated for each block, because working on blocks of pixels is less destructive than working on individual pixels.
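The transform of a single 8×8 block can be sketched directly from the usual DCT-II definition. This is a slow, illustrative version with a hypothetical function name; real codecs use fast factorizations and also shift the pixel values by 128 first, which this sketch skips.

```python
import math

N = 8

def dct_8x8(block):
    """2D DCT-II of an 8x8 block given as a list of 8 rows of 8 numbers."""
    def c(k):
        # Normalization factor: 1/sqrt(2) for the zero frequency, 1 otherwise.
        return 1 / math.sqrt(2) if k == 0 else 1.0

    coeffs = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            total = 0.0
            for x in range(N):
                for y in range(N):
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / 16)
                              * math.cos((2 * y + 1) * v * math.pi / 16))
            coeffs[u][v] = 0.25 * c(u) * c(v) * total
    return coeffs

# A perfectly flat block: all the information ends up in one coefficient.
flat = [[100] * N for _ in range(N)]
coeffs = dct_8x8(flat)
print(round(coeffs[0][0], 6))  # approximately 800, every other entry is ~0
```

Smooth blocks concentrate their content in a handful of coefficients, which is what makes the later phases effective.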

Then the 64 (8×8) coefficients of each block, which are floating-point values, are quantized and rounded to integers.

Finally, the overall sequence of data is entropy coded. For u = 0 and v = 0 the value of the FDCT (forward DCT) is proportional to the average of the pixel block and is called the DC component; the remaining 63 coefficients are called AC terms.
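For reference, the forward DCT of an 8×8 block is commonly written as follows. The symbols f(x, y) for the pixel values and C(k) for the normalization factor are notation assumed here, not taken from the text above:

$$
F(u,v) = \frac{1}{4}\,C(u)\,C(v)\sum_{x=0}^{7}\sum_{y=0}^{7} f(x,y)\,\cos\frac{(2x+1)u\pi}{16}\,\cos\frac{(2y+1)v\pi}{16},
\qquad
C(k) = \begin{cases} 1/\sqrt{2} & k = 0 \\ 1 & k > 0 \end{cases}
$$

Setting u = v = 0 makes both cosines equal to 1, so

$$
F(0,0) = \frac{1}{8}\sum_{x=0}^{7}\sum_{y=0}^{7} f(x,y) = 8\,\bar{f},
$$

where $\bar{f}$ is the mean of the 64 pixel values. This is the sense in which the DC component is proportional to the block average.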

Decompression runs the same phases in reverse, but the values from the third phase can no longer be recovered exactly, because the floating-point coefficients were rounded to integers. This is where image data is lost.
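To make the loss concrete, the sketch below pushes one 8×8 block through the transform, rounds the coefficients, and then reverses the steps. The uniform step `STEP = 16` and all function names are assumptions for illustration; real JPEG divides each coefficient by its own entry in a quantization table before rounding.

```python
import math

N = 8
STEP = 16  # hypothetical uniform quantization step, not a real JPEG table

def _c(k):
    return 1 / math.sqrt(2) if k == 0 else 1.0

def _basis(x, u):
    return math.cos((2 * x + 1) * u * math.pi / 16)

def dct_8x8(block):
    """Forward 2D DCT-II of an 8x8 block."""
    return [[0.25 * _c(u) * _c(v)
             * sum(block[x][y] * _basis(x, u) * _basis(y, v)
                   for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]

def idct_8x8(coeffs):
    """Inverse 2D DCT of an 8x8 block of coefficients."""
    return [[0.25 * sum(_c(u) * _c(v) * coeffs[u][v]
                        * _basis(x, u) * _basis(y, v)
                        for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]

def quantize(coeffs, step=STEP):
    """Divide by the step and round to integers: the irreversible part."""
    return [[round(c / step) for c in row] for row in coeffs]

def dequantize(q, step=STEP):
    return [[v * step for v in row] for row in q]

def max_abs_diff(a, b):
    return max(abs(a[x][y] - b[x][y]) for x in range(N) for y in range(N))

block = [[8 * x + 3 * y for y in range(N)] for x in range(N)]  # smooth gradient
exact = idct_8x8(dct_8x8(block))
lossy = idct_8x8(dequantize(quantize(dct_8x8(block))))

max_err_exact = max_abs_diff(exact, block)
max_err_lossy = max_abs_diff(lossy, block)
print(max_err_exact < 1e-6)   # True: the transform alone is reversible
print(max_err_lossy > 0.4)    # True: rounding the coefficients loses data
```

The transform and its inverse cancel out almost perfectly in floating point; only the rounding step makes the round trip inexact.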

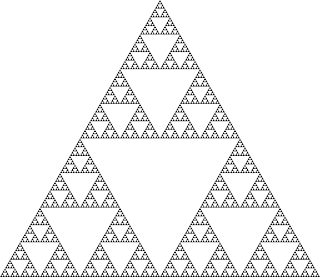

With this type of compression, both compressing and decompressing an image take a moderate amount of time. There is also another type of compression based on IFS (Iterated Function Systems), that is, on fractals: starting from a geometric figure (a polygon) and applying the functions over and over, going deeper and deeper, you obtain a graphical representation of the whole fractal described by that specific IFS. The Sierpinski triangle is an example.
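As an illustration of how a few functions can describe a whole figure, the sketch below draws the Sierpinski triangle with the random-iteration method (often called the chaos game). The corner coordinates, function names, and text rendering are choices made for this example; it shows how an IFS is decoded, not how a fractal image compressor finds the functions for a photograph.

```python
import random

# The IFS consists of three maps, each moving a point halfway toward one corner.
CORNERS = [(0.0, 0.0), (1.0, 0.0), (0.5, 1.0)]

def sierpinski_points(n, seed=0):
    """Generate n points on the attractor by applying randomly chosen maps."""
    rng = random.Random(seed)
    x, y = 0.0, 0.0                     # start at a corner of the triangle
    points = []
    for _ in range(n):
        cx, cy = rng.choice(CORNERS)
        x, y = (x + cx) / 2, (y + cy) / 2
        points.append((x, y))
    return points

def render(points, size=32):
    """Plot the points on a size x size character grid."""
    grid = [[" "] * size for _ in range(size)]
    for x, y in points:
        col = min(int(x * size), size - 1)
        row = min(int((1 - y) * size), size - 1)
        grid[row][col] = "#"
    return "\n".join("".join(r) for r in grid)

print(render(sierpinski_points(5000)))
```

The whole picture is described by three corner points, and drawing it is just a cheap loop, which matches the fast decompression that this family of methods is known for.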

The only problem with this approach is that compressing an image takes a long time. This is offset by very fast decompression, which matters because an image is compressed once but has to be decompressed every time it is viewed.

In this article I have described the main methods of image compression. I hope it has filled some gaps you may have had about the formats used to store your photos or your designs.